Will This Be The Last Time? Probably.

Yeah, I know. I got a new MacBook Pro after just getting a new one about fifteen months ago. Why? It was just too goddamned heavy. Yes, I appreciated the additional screen real estate, but since I use it connected to a wide-screen monitor when I'm home, the weight/benefit ratio just wasn't adding up.

I thought about getting the 15-inch Air (like Ben did, the same night), but I needed the one extra port that came on the Pro.

I also knew that if I was going to replace the 16, with the upcoming threatened tariffs and the soon-to-be accompanying roll-back in my income, it was either now or never. I checked what Apple was offering for the trade-in and pulled the trigger. I'm glad I did. The weight difference is only a couple pounds, but it's amazing what a difference those few pounds make. It also allowed me to go back to the smaller messenger bag, an added benefit.

Not Untrue!

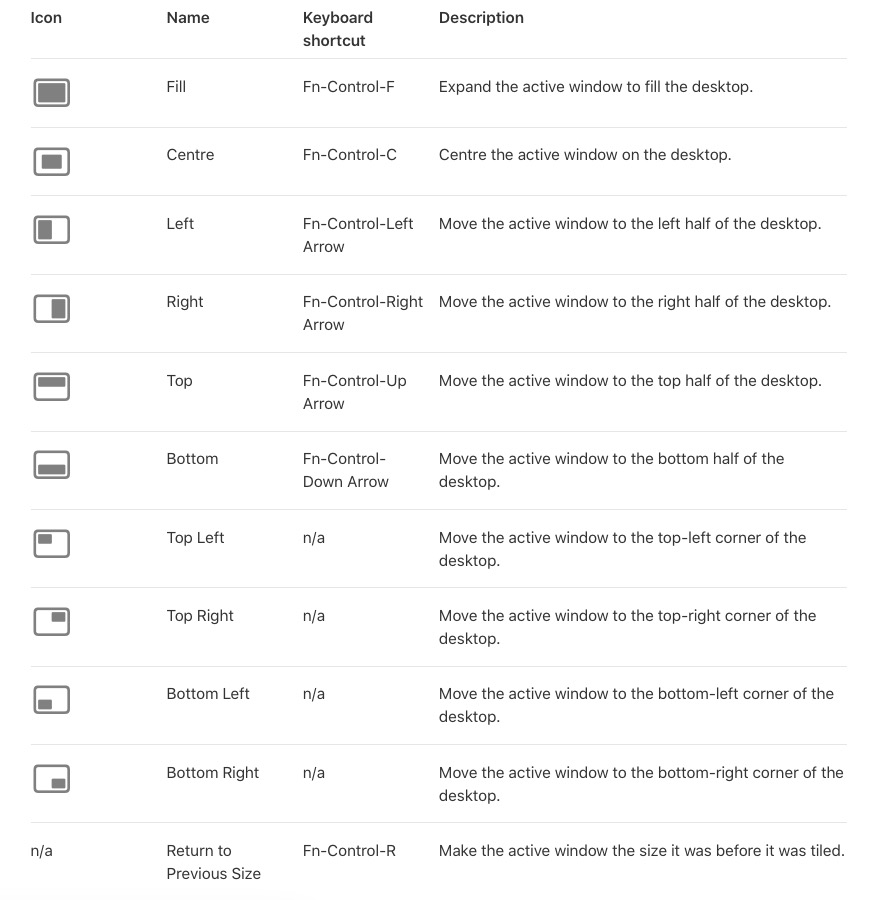

A Quick Window Tiling Guide for Mac Users on Sequoia

This is not "stupid" design. There was a deliberate decision to make the mouse useless while charging. But why?

Perhaps you're annoyed that you have to pause your work and let it charge, so you'll buy another one as a backup.

Also, if you own one long enough, eventually its internal battery will deteriorate, offering progressively shorter work time. If it had the charging port in the front, you could leave it always attached and still use it, just downgrading your wireless mouse to a wired mouse. It would be acceptable to some people. But this way you have to buy a new one.

Those are two potential chances for additional revenue with just one seemingly "stupid" design choice.

It's the same as putting critical components next to heat sources in their laptops. Apple doesn't do stupid things; it does asshole things.

As a Witting and Often Unwitting Beta Tester for Apple Software…

Just Puttin' this Out There

Fuck You, Apple!

Right?!

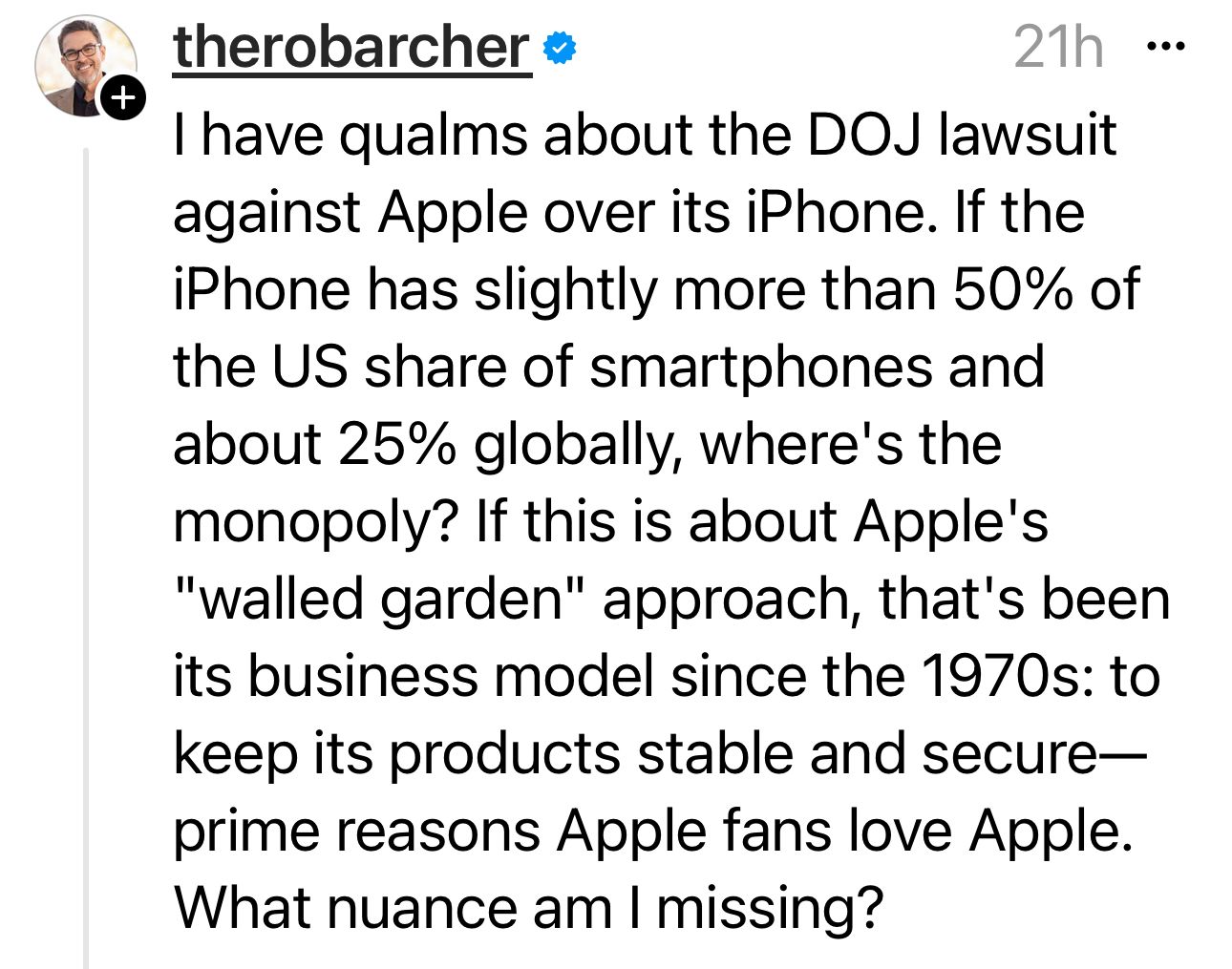

I Have Qualms

Obligatory Yearly Hand Held Photo Of Orion

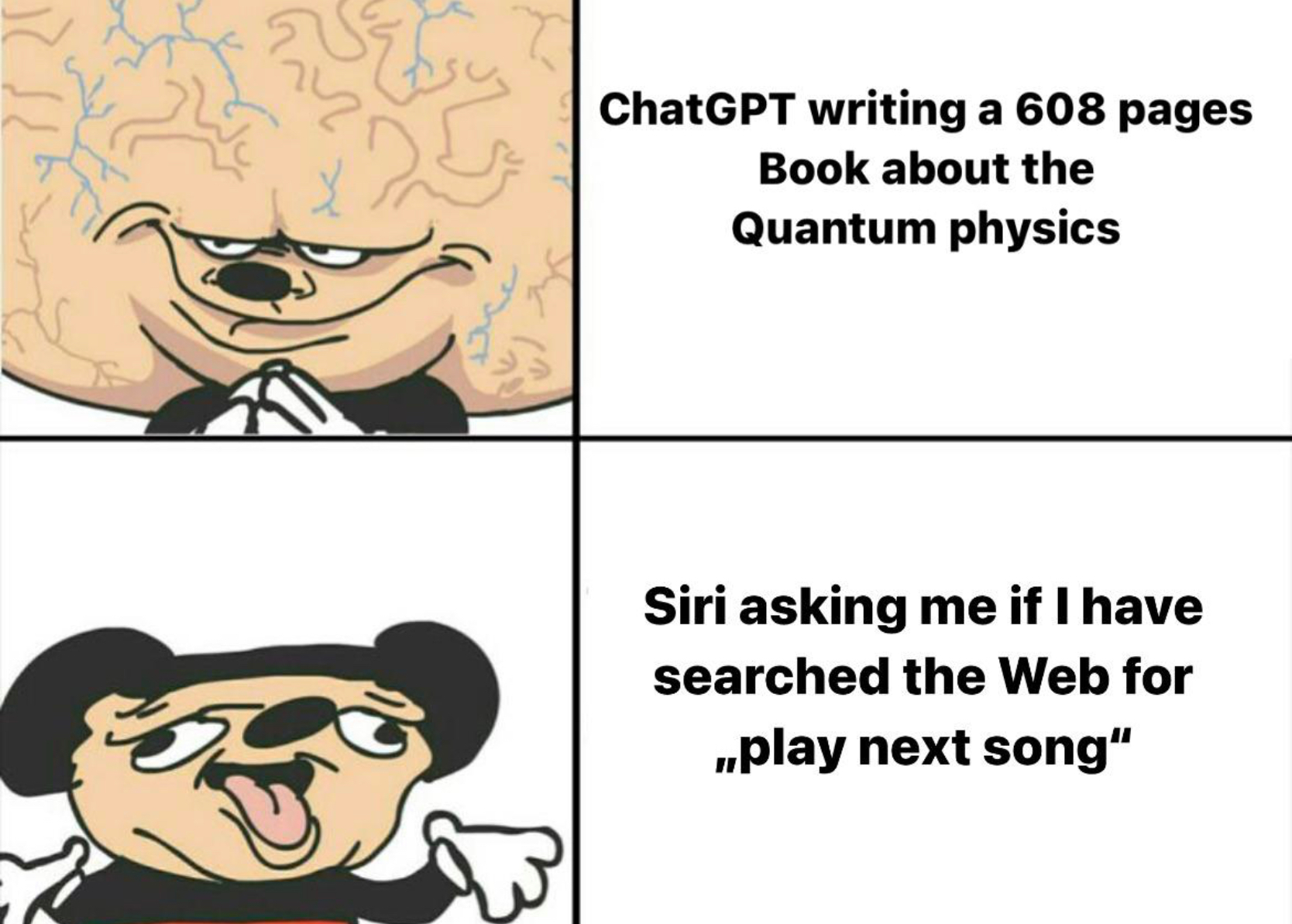

🤣 🤣 🤣

🤣 🤣 🤣

Happy Birthday

No Lie Detected

Oops! I Did It Again

If you remember, I was in a bit of a quandary when I replaced my MacBook Pro in 2021. 16 or 14-inch M1? That was the question. 16 ordered. Immediately canceled because I decided it was too big and heavy. 14 ordered. Ten minutes later, 14 canceled and 16 reordered. Half an hour later 16 canceled and 14 reordered.

The 14 has been fantastic these past two years (where has the time gone?) and I really had no intention of getting rid of it until it reached the point where it would no longer accept OS upgrades, but after gazing over at Ben's 16-inch longingly because of the added screen real estate, I went online and checked prices. Using Ben's education discount (there are many advantages to being married to an educator) and a very generous trade in allowance being offered by Apple on my 14, it was kind of a no-brainer.

Yeah, I was still a bit concerned about the overall size and weight—not to mention the rather…lackluster…reviews the M2 machines had gotten, but I figured I'd give it a try (I had two weeks to return it, after all) and if it proved too unwieldy or performance was unsatisfactory, I'd send it back and return to my trusty 14.

Well, after only 48 hours, I am quite enamored of this machine and will be holding on to it.

Yeah, I had to order a new Timbuk2 bag to account for its extra…girth…but that's a minor point.

Sonoma Update

It's been a few days since the last update, and most everything seems to be working fine. At least I've had no major issues like I did with the first and second betas. Apple itself must believe this release is stable enough to release to the general public testers, and I would have to agree.

There are still a few applications that won't work, oftentimes admitting up front that they aren't ready for this version of macOS, but they aren't anything I use on a daily—or even regular—basis.

Carbon Copy Cloner balks that it hasn't been tested with Sonoma, but still appears to run properly and I have full backups every morning like I always did before. Specialized utilities like OnyX flat out refuse to run, but even some of my more temperamental programs (VueScan and Celestia immediately come to mind) are behaving under Beta 3, whereas they were giving me grief under Beta 2.

Do you like that wallpaper? It comes from something called MyWallpaper, a very reasonably priced app that really lets you add a little wow to your desktop with hundreds of different animated backgrounds. The developer also offers a purely static wallpaper app with all the same images available for free.

What I'm Running These Days

Back Into The Fray

I had some issues with the first Developer Beta that I eventually overcame. Other issues popped up to the point that I gave up and wiped the drive, swearing the whole thing off until we got further into the development cycle.

Being a bear for punishment, when Beta 2 was released I jumped back on. As is often the case, things that were working perfectly fine in one beta get broken on a subsequent one. This was the case with Beta 2.

I have Sonoma installed in a separate container on the main hard drive. Since the drive isn't big enough to completely clone everything, when I migrated my profile, I left Documents, Pictures, and Music in the original locations, being diligent when using Sonoma to just point everything I changed or added back to those original folders. It's worked like this forever with no problems. Forever until Beta 2. For some reason the original Ventura installation wasn't recognizing my account from Sonoma, even though it was identical (logged into the same Apple account, same name, same password, etc.). When I checked permissions on the Ventura folders it saw my "Mark" account as something new and was stuck in a loop "getting permissions." After several days I gave up trying to fix it, wiped everything and happily went back to Ventura.

Well, Beta 3 (usually the point in the past where I've jumped in because it's the point where things start to stabilize) came out last week, and—being a glutton for punishment—I installed it again. I don't know if I did something different, or if the issue had simply been fixed, but my permissions errors were gone. I could access everything on the Ventura partition without issue, and several other glitches were seemingly rectified in this release as well.

Is it ready for release? Not by a long shot, but it's definitely further along. It's not an earth-shattering update to Ventura, but there are lots of nice little refinements that I find useful and I think most Mac users will appreciate.

Last Time Was By Mistake…

This Was an Accident

As you know, I've been participating in Apple's Public Beta programs for years. Early on (I think it was pre-Mavericks, actually) I learned the hard way that you do NOT install a beta on your main daily driver. Since that unfortunate mishap, I haven't stopped installing betas; I've just learned how to do it safely. Namely, by either installing on an external drive, or on a wholly separate partition on the main drive. The latter has been my preferred method for the last several iterations. And even then, I don't usually jump into the fray until Beta 3 or 4.

Well, imagine my surprise when I saw the new macOS Sonoma showing up as an available update in my System Preferences today. I guess you don't even need to be a member of the cordoned-off Public Beta program any more and—if you're foolhardy enough—can delve right into the Developer Beta Universe.

Okay, I thought. Why not? I'll create a separate partition and direct the installer to go there. Beta 1 is going to be fraught with danger, but it's safely tucked away from my critical data so what's the harm?

Well, it turns out this beta (and perhaps all developer releases?) doesn't follow the normal install routine. I clicked "upgrade" and it did not offer the customary screen prompting me where I'd like to install it. It simply rebooted and started installing.

Oh shit, I thought. Thankfully, I had last night's full-disk backup so I knew if I had to go back to Ventura I could. It wouldn't be pretty, and it would take several hours to reinstall the OS over the air and then restore all my data, but at least I had that safety net.

After about 20 minutes, the Sonoma install completed and brought me to the log in screen. "So far, so good," I thought. I logged in and everything came up normally.

Except…I had no internet connectivity. It showed I was connected via WiFi to my home network, but whenever I tried to go anywhere online I got a notice that "You are not connected to the internet." I tried connecting via my phone's hotspot. I tried via the Cox shared network point. Nothing.

I turned off my VPN. I turned off the firewall. I turned off my ad blockers. Still nothing. Of course, there was not much info on the web about this yet, so after screwing around with it for about a half hour, I said fuck it, rebooted, wiped the drive, and two hours later Ventura was back up and running.

Yup.

Today's Apple Product Announcement

For me, the creepiness factor of Apple's "Vision Pro" is off the charts. It's not just the look, but all the personal biometric data it collects and processes—ostensibly in the name of maintaining your personal security.

While it's still a far cry from the device described in the final paragraphs below, I can easily see it ending up there at some point in the not-too-distant future.

A post I made in 2017 (skip to the "Brain Waves" section toward the end if you want to skip all the stereo geek stuff):

The Future of High Fidelity

I was cleaning stuff out over the weekend and ran across a file folder full of clippings I'd kept from various sources over the years. I was a big hi-fi geek in high school and college, and one of the articles I kept that I'd always loved was a bit of fiction from the mind of Larry Klein, published July 1977 in the magazine Stereo Review, describing the history of audio reproduction as told from a future perspective. Since the piece was written many years in advance of the personal computer revolution, the author was wildly off-base with some of his ideas, but others have manifested so close in concept—if not exact form—that I can't help but wonder if many young engineers of the day took them to heart in order to bring them to fruition.

And I would be very surprised indeed if one or more of the writers of Brainstorm had not read the section on neural implants, if only in passing…

Two Hundred Years of Recording

The fact that this year, 2077, is the Bicentennial of sound recording has gone virtually unnoticed. The reason is clear: electronic recording in all its manifestations so pervades our everyday lives that it is difficult to see it as a separate art or science, or even in any kind of historical perspective. There is, nevertheless, an unbroken evolutionary chain linking today's "encee" experience and Edison's successful first attempt to emboss a nursery rhyme on a tinfoil-coated cylinder.

Elsewhere in this Transfax printout you will find an article from our archives dealing with the first one hundred years of recording. Although today's record/reproduce technology has literally nothing in common with those first primitive, mechanical attempts to preserve a sonic experience, it is instructive from a historical and philosophical perspective to examine the development of what was to become known as "high fidelity."

Primitive Audio

It is clear from the writings of the time that the period just after the year 1950 was the turning point for sound reproduction. For a variety of sociological, economic, and technological reasons, the pursuit of accurate sound reproduction suddenly evolved from the passionate pastime of a few engineers and Bell Laboratories scientists into a multimillion-dollar industry. In the space of only fifteen years, "hi-fi" became virtually a mass-market commodity and certainly a household term. In the late 1970s, the first primitive microprocessors (miniature computer type logic-plus-memory devices) appeared in home audio equipment. These permitted the user to program what was known as an "FM tuner," "record player," or "tape recorder" to follow a certain procedure in delivering broadcast or recorded material.

For those who are not collectors of those antique audio devices, which employed "records" or "tapes," such terms require explanation. From its earliest beginning, recording employed an analog technique. This means that whatever sound was to be preserved and subsequently reproduced was converted to an equivalent corresponding mechanical irregularity on a surface. When playback was desired, this irregularity was detected or "read" by a mechanical sensing device and directly (later, indirectly) reconverted into sound. It may be difficult to believe, but if, say, a middle-A tone (which corresponds to air vibrating at a rate of 440 times per second) was recorded, the signal would actually consist of a series of undulations or bumps which would be made to travel under a very fine-pointed stylus at a rate of 440 undulations per second. Looking back from a present-day perspective, it seems a wonder that this sort of crude mechanical technique worked at all—and a veritable miracle that it worked as well as it did.

The End of Analog

Magnetic recording first came into prominence in the 1950s. Instead of undulations on the walls of a groove molded in a nominally flat vinyl disc, there were a series of magnetic patterns laid down on very long lengths of thin plastic tape coated on one side with a readily magnetized material. However, the system was still analog in principle, since if the 440-Hz tone was magnetically recorded, 440 cycles of magnetic flux passed by the reproducing head in playback. All analog systems—no matter what the format—suffered the same inherent problem (susceptibility to noise and distortion), and the drive for further improvement caused the development of the digital audio recorder.

Simply explained, the digital recording technique "samples" the signal, say, 50,000 times a second, and for each instant of sampling it assigns a digitally encoded number that indicates the relative amplitude of the signal at that moment. Even the most complex signal can be assigned one number that will totally describe it for an instant in time if the "instant" chosen is brief enough. The more complex the signal, the greater the number of samples needed to represent it properly in encoded form.

In the late Seventies and early Eighties, digital audio tape recording proliferated on the professional level, and slightly later it also became standard for the home recordist. Many of the better home videotape recording systems were adaptable for audio recording; they either came with built-in video-to-audio switching or had accessory converters available.

The video disc, first announced in the late 1960s, progressed rapidly along its own independent path, since it benefited from many of the same technical developments as the other home video and digital products. By the mid 1980s a variety of video-disc players were available that, when fed the proper disc, could provide both large-screen video programs with stereo sound or multichannel audio with separate reverb-only channels. The flat semiconductor TV screen that was available in any size desired appeared in the early 1980s. It was the inevitable outgrowth of the light-emitting diode (LED) technology that provided the readouts for the electronic watches and calculators that were ubiquitous during the early 1970s. Later in the decade, giant-screen home video faced competition from holographic recording/playback technique. Whether the viewer preferred a three-dimensional image that was necessarily limited in size and confined (somewhat) in spatial perspective or a life-size two-dimensional one ultimately came down to the specifics of the program material. In any case, the two non-compatible formats competed for the next twenty years or so.

LSI, RAM, and ROM

By the late 1980s, the pocket computer (not calculator) had become a reality. Here too, the evolutionary trend had been clearly visible for some time. The first integrated circuits were built in the late 1950s with only one active component per "chip." By the end of the Seventies, some LSI (large-scale integrated circuit) chips had over 30,000 components, and RAM (random-access memory) and ROM (read-only memory) microprocessor chips became almost as common as resistors in the hi-fi gear of the early 1980s. ADC's Accutrac turntable (ca. 1976) was the first product resulting from (in their phrase) "the marriage of a computer and an audio component." The progeny of this miscegenation was the forerunner of a host of automatic audio components that could remember stations, search out selections, adjust controls, prevent audio mishaps, monitor performance, and in general make equipment operation easier while offering greater fidelity than ever before. As a critic of the period wrote, "This new generation of computerized audio equipment will take care of everything for the audiophile except the listening." Shortly thereafter, the equipment did begin to "listen" also, and soon any audiophile without a totally voice-controlled system (keyed, of course, only to his own vocal patterns) felt very much behind the times. One could also verbally program the next selection—or the next one hundred.

"Resident" Computers

The turn of the century saw LSI chips with million-bit memories and perhaps 250 logic circuits—and the eruption of two controversies, one major and one minor. The major controversy would have been familiar to those of our ancestors who were involved in the cable-vs-broadcast TV hassles during the 1970s and later. The big question in the year 2000 was the advantage of "time sharing" compared with "resident" computers for program storage.

Since the 1950s the need for fast output and large memory-storage capacity had driven designers into ever more sophisticated devices, most of them derived from fundamental research in solid-state physics. The late 1970s, a period of rapid advances, saw the primitive beginnings of numerous different technologies, including the charge-transfer device (CTD), the surface acoustic-wave device (SAW), and the charge-coupled device (CCD), each of which had special attributes and ultimately was pressed into the service of sound reproduction processing and memory. The development of the technique of molecular-beam epitaxy (which enabled chips to be fabricated by bombarding them with molecular beams) eventually led to superconductor (rather than semiconductor) LSIs and molecular-tag memory (MTM) devices. Super-fast and with a fantastically large storage capacity, the MTM chips functioned as the heart of the pocket-size ROM cartridge (or "cart" as it was known) that contained the equivalent of hundreds of primitive LP discs.

The read-only memory of the MT carts could provide only the music that had been "hard-programmed" into them. This was fine for the classical music buff, since it was possible to buy the complete works of, say, Bach, Beethoven, and Carter in a variety of performances all in one MT cart and still have molecules left over for the complete works of Stravinsky, Copland, Smythe, and Kuzo. However, anyone concerned with keeping his music library up to date with the latest Rama-rock releases or Martian crystal-tone productions obviously needed a programmable memory. But how would the new program get to the resident computer and in what format?

By this time, every home naturally had a direct cable to a master time-sharing computer whose memory banks were constantly being updated with the latest compositions and performances. That was just one of its minor facilities, of course, but music listeners who subscribed to the service needed only request a desired selection and it would be fed and stored in their RAM memory units. Those audiophiles who derived no ego gratification from owning an enormous library of MT carts could simply use the main computer feed directly and avoid the redundance of storing program material at home. Everyone was wired anyway, directly, to the National Computer by ultra-wide-bandwidth cable. The cable normally handled multichannel audio-video transmissions in addition to personal communication, bill-paying, voting, etc., and, of course, the Transfax printout you are now reading.

Creative Options

The other controversy mentioned, a relatively minor one, involved a question of creative aesthetics. The equalizers used by the primitive analog audiophiles provided the ability to second-guess the recording engineers in respect to tonal balance in playback. This was child's play compared with the options provided by computer manipulation of the digitally encoded material. Rhythms and tempos of recorded material could easily be recomposed ("decomposed" in the view of some purists) to the listeners' tastes. Furthermore, one could ask the computer to compose original works or to pervert compositions already in its memory banks. For example, one could hear Mongo Santamaria's rendition of Mozart's Jupiter Symphony or even A Hard Day's Night as orchestrated by Bach or Rimsky-Korsakov. The computer could deliver such works in full fidelity—sonic fidelity, that is—without a millisecond's hesitation.

Since Edison's time, the major problems of high fidelity have occurred in the interface devices, those transducers that "read" the analog-encoded material from the recording at one end of the chain or converted it into sound at the other. Digital recording, computer manipulation of the program material, and the MT memory carts solved the pickup end of the problem elegantly; however, for decades the electronic-to-sonic reconversion remained terribly inexact, despite the fact that it was known for at least a century that the core of the problem lay in the need to overlay a specific acoustic recording environment on a nonspecific listening environment. Techniques such as time-delay reverb devices, quadraphonics, and binaural recording/playback, which put enough "information" into a listening environment to override, more or less, the natural acoustics, were frequently quite successful in creating an illusion of sonic reality. But it continued to be very difficult to establish the necessary psychoacoustic cues. The problem was soluble, but it was certainly not easy with conventional technology. And the necessary unconventional technology appeared only in the early years of this century.

Brain Waves

It has long been known that all the material fed to the brain from the various sense organs is first translated into a sort of pulse-code modulation. But it was only fifty years ago that the psychophysiologists managed to break the so-called "neural code." The first applications of the neural-code (NC) converters were, logically enough, as prosthetic devices for the blind and deaf. (The artificial sense organs themselves could actually have been built a hundred years ago, but the conversion of their output signals to an encoded form that the brain would accept and translate into sight and sound was a major stumbling block.)

The NC (encee) converter was fed by micro-miniature sensors and then coupled to the brain through whatever neural pathways were available. Since rather delicate surgery was required to implant and connect the sensory transducer/converter properly, the invention of the Slansky Neuron Coupler was hailed as a breakthrough rivaling the original invention of the neural code converter. The Slansky Coupler, which enabled encoded information to be radiated to the brain without direct connection, took the form (for prosthetic use) of a thin disk subcutaneously implanted at the apex of the skull. Micro-miniature sensors were also implanted in the general location of the patient's eyes or ears. Total surgery time was less than one hour, and upon completion the recipient could hear or see at least as well as a person with normal senses.

What has all this to do with high-fidelity reproduction? Ten years ago a medical student "borrowed" a Slansky device and with the aid of an engineer friend connected it to a hi-fi system and then taped it to his forehead. Initially, the story goes, the music was "translated"—"scrambled" would be more accurate—into color and form and the video into sound, but several hundred engineering hours later the digitally encoded program and the Slansky device were properly coupled and a reasonable analog of the program was directly experienced.

When the commercial entertainment possibilities inherent in the Slansky Coupler became evident, it was only a matter of time before special program material became available for it. And at almost every live entertainment or sports event, hi-fi hobbyists could be seen wearing their sensory helmets and recording the material. When played back later, the sight and sound fed directly to the brain provided a perfect you-are-there experience, except that other sensory stimuli were lacking. That was taken care of in short order. Although the complete sensory recording package was far too expensive for even the advanced neural recordist, "underground" cartridges began to appear that provided a complete surrogate sensory experience. You were there—doing, feeling, tasting, hearing, seeing whatever the recordist underwent. The experience was not only subjectively indistinguishable from the real thing, but it was, usually, better than life. After all, could the average person-in-the-street ever know what it is to play a perfect Cyrano before an admiring audience or spend an evening on the town (or home in bed) with his favorite video star?

The potential for poetry—and for pornography—was unlimited. And therein, as we have learned, is the social danger of the Slansky device. Since the vicarious thrills provided by the neural-code-converter/coupler are certainly more "interesting" than real life ever is, more and more citizens are daily joining the ranks of the "encees." They claim—if you can establish communication with them—that life under the helmet is far superior to that experienced by the hidebound "realies." Perhaps they are right, but the insidious pleasures of the encee helmet have produced a hard core of dropouts from life far exceeding in both number and unreachability those generated by the drug cultures of the last century. And while the civil-liberties and moral aspects of the matter are being hotly debated, the situation is worsening daily. It is doubtful that the early audiophiles ever dreamed that the achievement of ultimate high-fidelity sound reproduction would one day threaten the very fabric of the society that made it possible.

It's Not That Big

"That's what she said!"

There was nothing wrong with my venerable Apple Watch Series 6, other than that it was getting pretty banged up and the battery life was significantly less than what it had been when it was new, but I still wanted a new one. Ben had replaced his Series 5 with a Series 7 last year, but I couldn't justify the expense, especially since the 7 wasn't that big of an improvement over the 6—and I kept holding out hope that Apple would crack the elusive non-invasive glucose monitoring conundrum on a subsequent model. (As time passed it was abundantly clear that feature wasn't going to appear on this year's model either and sadly, still seems to remain several years away.)

When Apple revealed their new lineup last fall, I was immediately intrigued by their new aggressively styled, off-road 4×4 "Ultra" model. I knew it would be overkill for me, aimed as it was at a demographic of which I was most certainly not a part, but I loved the styling. And yet, it seemed so. damn. big. And then there was the price.

If for no other reason than the waning battery life, I still wanted a new watch, so I opted for the 45mm Series 8 instead. After having a dark blue watch for years, I chose the basic aluminum finish this time, and it allowed me the freedom to use just about any color band with it. It responded to commands much more quickly than my old 6, would easily hold a charge throughout the day, and I liked the looks.

Ben had watch envy after my Series 8 arrived. He, however, was also very intrigued by the Ultra. As you know, he's a big guy, and he really wanted to see how it looked on his wrist. About a week after I received my 8, we actually went to an Apple store and saw the Ultra in person.

Ben bought one, and I immediately regretted getting the 8.

As reviews and videos started rolling in on the Ultra, the more convinced I became that I'd made the wrong decision.

Luckily, since it was the holidays, Apple had extended their return window, so after vacillating back and forth for days and days on whether or not to return and exchange my 8, about three weeks ago, I took the 8 back to Apple and swapped it for the Ultra. I'd gotten a decent trade-in for my Series 6, so the additional financial sting wasn't that bad, and I have to say, I haven't regretted the purchase one bit.

The battery life is crazy! I often used to take my 6 "into the red" by the end of the day, preventing me from regularly using it for any sort of sleep monitoring, but the Ultra just keeps going and going. I can now consistently wear it overnight and only throw it on the charger when I jump in the shower the next morning to top it off.

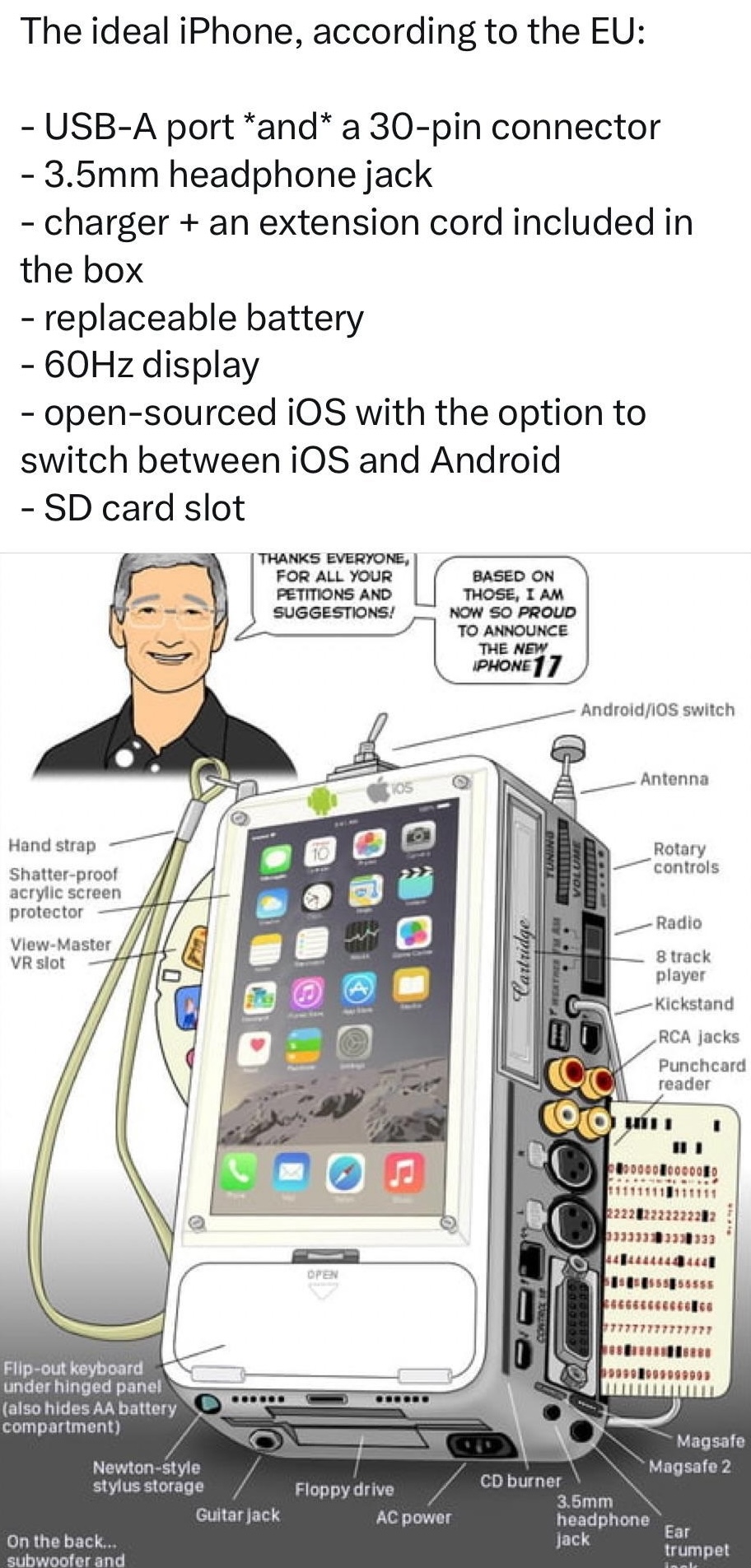

Some Apple Humor

Testing Ventura

Being the inveterate geek that I am, and attempting to find whatever joy I can in this pre-apocalyptic hellscape wherever I can, whenever a new Mac O/S comes on the scene I immediately jump on the public beta test bandwagon as soon as the betas are available and give them a spin.

I'm an Apple fan, but not an Apple fanboy (there is a difference), so there's no way I'm paying $99 a year (or whatever the going rate is these days) to be a part of the developer community just to get these releases the moment they become available. I'm satisfied to wait a few days (or, in the case of the initial releases, a couple weeks) to grab them as a public beta tester.

Several years ago I had a pretty bad experience with one of the betas—admittedly ignoring everyone's advice to not use them as your daily driver—that for a while soured me to the whole experience, but since then I've gotten smarter about trying them out, loading them on external drives so as to leave my main drive untouched.

Tiring of that approach, this year I decided to go a different route. One of the reasons I went with a 1 terabyte drive on my current Mac is so that I can partition part of it off and load the betas on that and have all the speed and accessibility that scenario affords while keeping my main drive fully sequestered.

All that being said, I've played with Beta 1 and now Beta 2 for the past couple weeks, and in my estimation, they're actually almost ready for prime time—at least for everything I use my Mac for. What's prevented me from throwing caution to the wind and loading the O/S as my daily driver is that there are still glitches—the most notable not being able to unlock the Mac with my watch and Messages not syncing between devices—that prevent me from doing something so foolish. And then there's the fact that some of the much-touted new features (like being able to use your iPhone as your webcam) simply refuse to work for me. In fact, I finally gave up trying to get these things to work and deleted the Ventura partition altogether, returning to using Monterey full time. Unlike my wild and crazy youth, I now prefer reliability over new and shiny. At least most of the time.

UPDATE 10pm: After watching a few more YouTube videos today and realizing all the things I'd missed in Ventura, I decided to give the new O/S one more spin. So this afternoon I created a new Ventura partition, re-enrolled my Mac in the beta program, and went about installing it again. It was only when the install completed and the machine rebooted that I realized that I had screwed up…big time. I had done this at work and had been distracted when I started the install and I never actually specified the Ventura partition, so—you guessed it—I installed it over my existing Monterey installation.

I didn't panic; I had a full Carbon Copy backup from 12 a.m. this morning, so I knew I could always wipe the drive, reinstall Monterey and then restore all my shit from the backup.

But I'm actually not in any great hurry to do that. All the little glitches I just got finished complaining about in the paragraph above had disappeared, and as of this writing, everything is working normally.

Two Mysteries Solved!

I've had two ongoing tech thorns in my side for months, both work-related.

The first is that all of a sudden one day Outlook stopped notifying me when new mail arrived. You know how you get the "ding" and the icon in the task bar displays a little envelope? Well, my work laptop stopped doing that. This had happened before about a year ago, and it cleared itself up when I upgraded to Windows 11.

After a few weeks, I just couldn't get into 11, so I wiped the whole machine and reinstalled 10. Again, Outlook notifications worked as expected until one day they didn't. I tried every suggestion I found online (including some dubious hacks to the registry) and yet nothing worked. I was ready to give 11 another go by this point, and installed it on top of the existing 10 like I'd done previously. This time, however, the Outlook notifications still weren't working.

I've lived with it like this for a while now, but I've missed several important emails because I never saw the notification, so something had to give. Once again I returned to the Google and one suggestion I hadn't tried was to simply create a new Outlook profile and see if that cleared things up.

Well smack my butt and call me a whore. It worked.

Sometimes the simplest solutions are the ones overlooked.

The other problem is that in my group chat at work, one of my colleagues is an Android user, so the whole chat defaults to SMS. A while back some texts were shown as failing to send, even though my blue-bubble companions were receiving what I'd sent just fine. (I should note this was only happening on my Mac through the Messages app, not when sending through my iPhone.)

With half the team being out the past two weeks, I was really only conversing with a green-bubble colleague most of the time, and ALL of the texts I sent him via my Mac were failing, but they worked fine from the phone.

After a lot of sleuthing, I finally fixed that by just toggling the SMS option in Messages on the phone.

Geek Stuff

So I Had to Send My AirPods in For Repair.

The left bud was hissing whenever I had it on noise canceling or transparency, so after a 15-minute chat with an online Apple "Have you tried resetting them?" representative, said rep agreed that even though they were out of warranty, repairs were covered under some extended program and they needed to be sent in.

I was a little hesitant because I didn't want to be without them for however long it was going to take, and quite frankly I wondered if I could just live with the situation until the AirPods Pro 2 came out in a few months, which we all know I'll be grabbing up the moment they're available.

When the postage-paid return box arrived two days later however, I threw any misgivings I had to the wind, packed them up and sent them on their way.

I got a notification the next day that "Mark's AirPods Pro have been left behind." I'd completely forgotten I had that enabled, but also thought the feature was supposed to work in a much more timely fashion. But whatever.

I clicked their location in the Find My app.

They were already at the repair facility in California.

The next day I got an email informing me that they had been repaired and had been shipped out.

I checked the location again, and they were still at the same facility.

The following day I got notice that they'd been delivered to our PO Box, so I checked the location again.

It turns out they just replaced both buds outright; the old ones were in California waiting for their ultimate disposition.

And Another Internet Meme is Born

Disappointed

Like millions of other Apple aficionados, on Monday I watched the WWDC broadcast.

And like I do almost every time I watch one of these things lately, I came away disappointed.

While I am not in the market for a new Mac—and not a MacBook Air by any means—I was still very much looking forward to seeing the array of fun new colors that were supposedly slated for this major redesign of the iconic laptop.

With Apple throwing a rainbow-hued paint bucket on the iMac last year, almost everyone was expecting them to follow a similar design aesthetic and do the same thing (including white keyboards) with the new Air.

Sadly that did not happen.

What we got was the usual silver and space grey, with two new colors: starlight (kind of a champagne gold) and midnight (a dark, dark navy that seems to border on black). While a new solid black would be welcome (anyone remember the black MacBook from years ago?), we didn't get that; nor did we get the expected white keyboards across the line.

I only half-listened to the presentations on iOS. It's not my focus. I don't hold nearly the amount of passion and engagement with my iPhone as I do with my Mac. It's a tool, nothing more.

And we got MacOS 13, also to be known as…

Ventura? Really? I know it's just a name and next year it will be something else, but with all the inspiring named locations in California you'd think they'd have gone with something a little more interesting. What's next? MacOS Oxnard?

I'll admit the default wallpaper is rather pretty.

I personally liked the name that had been floated prior to WWDC…

But I get it. Something lumbering and, well, extinct (even though the name refers to Mammoth Lake, or maybe Mountain, and not the long-dead mammal) isn't exactly the image Apple is trying to project.

Regardless of the name, some of the features and applications (to be honest, a lot of which are playing catch-up with Windows as well as Apple's own iOS) touted in Version 13 are interesting, but nothing that reached out and grabbed me, demanding "You need to install this beta NOW."

That said, will I upgrade when the final version becomes publicly available? Or even a late-stage beta on a separate partition? Of course I will. And I'll upgrade my iPhone to iOS 16 when the final version is available as well (I don't mess around with betas on my phone), even though I'll no doubt continue to utilize only a small fraction of what it's capable of doing.